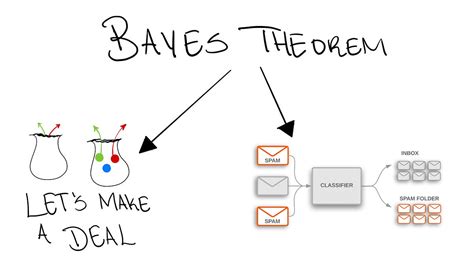

Naive Bayes is a popular machine learning algorithm used for classification tasks, such as spam vs. non-spam emails, cancer vs. non-cancer diagnosis, or sentiment analysis. Despite its simplicity, Naive Bayes is a powerful tool that can produce impressive results. In this article, we will delve into the closed-form solution of Naive Bayes, explaining it in simple terms, and provide practical examples to illustrate its application.

What is Naive Bayes?

Naive Bayes is a probabilistic classifier based on Bayes' theorem. It assumes that the features of a dataset are independent of each other, given the class label. This assumption is known as the "naive" part of the algorithm. Despite this simplification, Naive Bayes can perform remarkably well, especially when dealing with high-dimensional data.

Bayes' Theorem

To understand Naive Bayes, we need to start with Bayes' theorem. Bayes' theorem describes the probability of an event, based on prior knowledge of conditions that might be related to the event. Mathematically, it is represented as:

P(Y|X) = P(X|Y) * P(Y) / P(X)

where:

- P(Y|X) is the posterior probability of class Y, given the features X.

- P(X|Y) is the likelihood of features X, given class Y.

- P(Y) is the prior probability of class Y.

- P(X) is the evidence: the marginal probability of observing features X, summed over all classes.
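As a quick numeric check of the formula, here is a minimal Python sketch for a two-class problem. The function name `bayes_posterior` and all the probabilities are hypothetical, chosen only for illustration; P(X) is expanded over the two classes using the law of total probability.

```python
def bayes_posterior(prior_y, likelihood_x_given_y, prob_x):
    """Return P(Y|X) = P(X|Y) * P(Y) / P(X)."""
    return likelihood_x_given_y * prior_y / prob_x


# Hypothetical numbers, chosen only for illustration.
p_y = 0.3              # P(Y)
p_x_given_y = 0.6      # P(X|Y)
p_x_given_not_y = 0.1  # P(X|not Y)

# Law of total probability: P(X) = P(X|Y) * P(Y) + P(X|not Y) * P(not Y)
p_x = p_x_given_y * p_y + p_x_given_not_y * (1 - p_y)

posterior = bayes_posterior(p_y, p_x_given_y, p_x)
print(f"P(Y|X) = {posterior:.2f}")  # P(Y|X) = 0.72
```

Observing X raises the probability of Y from the prior of 0.3 to about 0.72, because X is six times more likely under Y than under the alternative.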

Naive Bayes Closed-Form Solution

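The closed-form solution of Naive Bayes follows from Bayes' theorem plus the assumption that the features are conditionally independent given the class. Under that assumption the likelihood P(X|Y) factorizes into one term per feature, so the posterior probability of class Y, given the features X = (X1, ..., Xn), is:

P(Y|X) = P(Y) * ∏[P(Xi|Y)] / P(X)

where:

- P(Xi|Y) is the likelihood of feature Xi, given class Y.

- ∏[P(Xi|Y)] is the product of these per-feature likelihoods over all features.

- P(X) is the evidence, computed as the sum over all classes y of P(y) * ∏[P(Xi|y)]. It is the same for every class, so it only normalizes the posteriors and can be skipped when we just need the most probable class. Note that the naive assumption does not make P(X) equal to the product of the individual P(Xi), because the features are independent only within each class.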

Calculating Likelihood and Prior Probabilities

To calculate the likelihood and prior probabilities, we can use the following formulas:

- Likelihood: P(X|Y) = ∏[P(Xi|Y)]

- Per-feature likelihood: P(Xi|Y) = (number of instances of class Y that have feature Xi) / (number of instances of class Y)

- Prior Probability: P(Y) = (number of instances of class Y) / (total number of instances)

- Evidence: P(X) = sum over all classes Y of P(Y) * ∏[P(Xi|Y)]
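The counting behind these estimates can be sketched in a few lines of Python. This is a minimal illustration, assuming binary features (a word is either present or absent); the function name `estimate_parameters` and the four-email toy dataset are hypothetical.

```python
from collections import Counter


def estimate_parameters(samples, labels):
    """Estimate P(Y) and P(Xi|Y) by counting, for binary (present/absent) features."""
    total = len(labels)
    class_counts = Counter(labels)

    # P(Y) = instances of class Y / total instances
    priors = {y: count / total for y, count in class_counts.items()}

    feature_names = sorted({name for sample in samples for name in sample})
    likelihoods = {}
    for y, count in class_counts.items():
        rows = [s for s, label in zip(samples, labels) if label == y]
        # P(Xi|Y) = instances of class Y containing feature Xi / instances of class Y
        likelihoods[y] = {
            name: sum(1 for s in rows if s.get(name)) / count
            for name in feature_names
        }
    return priors, likelihoods


# Hypothetical toy data: which words appear in each email.
samples = [
    {"free": True, "money": True},
    {"free": True, "money": False},
    {"free": False, "money": True},
    {"free": False, "money": False},
]
labels = ["spam", "spam", "non-spam", "non-spam"]

priors, likelihoods = estimate_parameters(samples, labels)
print(priors)               # {'spam': 0.5, 'non-spam': 0.5}
print(likelihoods["spam"])  # {'free': 1.0, 'money': 0.5}
```

In this sketch a word never seen with a class gets probability 0, which zeroes out the whole product for that class; practical implementations usually add some form of smoothing to the counts to avoid this.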

Example: Spam vs. Non-Spam Emails

Suppose we have a dataset of emails, labeled as spam or non-spam. We want to classify a new email as spam or non-spam, based on its features (e.g., words). We can use Naive Bayes to calculate the posterior probability of the email being spam, given its features.

Step-by-Step Example

Here's a step-by-step example:

- Calculate the prior probabilities of spam and non-spam emails.

- Calculate the likelihood of each feature, given the class label.

- Calculate the posterior probability of the email being spam, given its features.

Let's say we have the following data:

| Feature | Likelihood given Spam | Likelihood given Non-Spam |
|---|---|---|
| Word "free" | 0.8 | 0.2 |
| Word "money" | 0.7 | 0.3 |
| Word "discount" | 0.6 | 0.4 |

The table gives the likelihood of each word under each class. Assuming equal prior probabilities for the two classes, we have:

- P(Spam) = 0.5, P(Non-Spam) = 0.5

- P(Word "free"|Spam) = 0.8, P(Word "free"|Non-Spam) = 0.2

- P(Word "money"|Spam) = 0.7, P(Word "money"|Non-Spam) = 0.3

- P(Word "discount"|Spam) = 0.6, P(Word "discount"|Non-Spam) = 0.4

Now, let's calculate the posterior probability of the email being spam, given its features.

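P(Spam|Word "free", Word "money", Word "discount") = P(Word "free"|Spam) * P(Word "money"|Spam) * P(Word "discount"|Spam) * P(Spam) / P(Word "free", Word "money", Word "discount")

The denominator is the evidence, obtained by summing the numerator's expression over both classes:

P(Word "free", Word "money", Word "discount") = (0.8 * 0.7 * 0.6 * 0.5) + (0.2 * 0.3 * 0.4 * 0.5) = 0.168 + 0.012 = 0.18

Using the values above, we get:

P(Spam|Word "free", Word "money", Word "discount") = 0.168 / 0.18 ≈ 0.93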

Since the posterior probability of the email being spam is greater than 0.5, we classify it as spam.
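The arithmetic can be checked with a short Python script. This is a sketch under the example's assumptions (equal priors and the likelihoods from the table); the function name `posteriors` and the dictionary layout are illustrative choices. It multiplies the prior by the per-word likelihoods for each class, then divides by the sum over both classes so that the two posteriors add up to 1.

```python
from math import prod

# Values taken from the example: equal priors and per-word likelihoods.
priors = {"spam": 0.5, "non-spam": 0.5}
likelihoods = {
    "spam": {"free": 0.8, "money": 0.7, "discount": 0.6},
    "non-spam": {"free": 0.2, "money": 0.3, "discount": 0.4},
}


def posteriors(words, priors, likelihoods):
    """Return P(class | words) for every class under the naive assumption."""
    # Unnormalized score per class: P(Y) * product of P(word|Y)
    joint = {
        y: priors[y] * prod(likelihoods[y][w] for w in words)
        for y in priors
    }
    # Evidence: sum of the scores over all classes
    evidence = sum(joint.values())
    return {y: score / evidence for y, score in joint.items()}


result = posteriors(["free", "money", "discount"], priors, likelihoods)
print(round(result["spam"], 4))      # 0.9333
print(round(result["non-spam"], 4))  # 0.0667
```

Because the evidence is shared by both classes, comparing the unnormalized scores (0.168 for spam against 0.012 for non-spam) already gives the same classification.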

Advantages and Disadvantages of Naive Bayes

Naive Bayes has several advantages and disadvantages:

Advantages:

- Easy to implement and interpret

- Handles high-dimensional data well

- Can handle missing data, since a feature with no observed value can simply be left out of the product

- Fast training and prediction times

Disadvantages:

- Assumes independence of features, which may not always be true

- Can be sensitive to the choice of prior probabilities

- May not perform well with complex relationships between features

Conclusion: Naive Bayes in Real-World Applications

Naive Bayes is a powerful tool that can be used in a variety of real-world applications, such as:

- Spam vs. non-spam email classification

- Sentiment analysis of text data

- Cancer vs. non-cancer diagnosis

- Image classification

Its simplicity, ease of implementation, and fast training and prediction times make it a popular choice among data scientists and machine learning practitioners.

Get Started with Naive Bayes Today!

If you're interested in learning more about Naive Bayes and how to apply it to your data, we encourage you to try out some tutorials or online courses. With its simplicity and effectiveness, Naive Bayes is a great algorithm to have in your machine learning toolkit.

We hope this article has provided you with a comprehensive understanding of the Naive Bayes closed-form solution.

What is Naive Bayes used for?

Naive Bayes is used for classification tasks, such as spam vs. non-spam emails, cancer vs. non-cancer diagnosis, or sentiment analysis.

What is the difference between Naive Bayes and Bayes' theorem?

Naive Bayes is a probabilistic classifier based on Bayes' theorem, but it assumes that the features of a dataset are independent of each other, given the class label.

Can Naive Bayes handle high-dimensional data?

Yes, Naive Bayes can handle high-dimensional data well, making it a popular choice for many applications.